The Problem

As sales volume increased larger customers required the company begin using EDI to transmit documents. Manual order entry also introduced errors and was not efficient enough to keep up with order volume. A system was needed for processing EDI documents as well as processing data into the company system from other sources. This system must be more efficient than previous document transmission methods and must be robust.

Project Overview

Requirements

- x12 version 4010 EDI document compliance

- AS2 capability

- Automatic order entry, invoicing and tracking

- User interface to create and interact with documents

The current company software for order entry is QuickBooks. Because QuickBooks has very limited ability to customize data an external database is required to store data for EDI documents. The following software was used to meet the project requirements.

- QODBC Server Edition – Allows software to interact with QuickBooks as an SQL database but has limited database functionality.

- SQL Express – MSSQL server to store data and perform more complex database functions outside of QODBC.

- bots – bots is an opensource EDI document processor.

- Talend – Talend is an opensource ETL software that uses a visual interface to move data and perform advanced data manipulation.

- Tomcat/Java – A Java webserver hosted with Tomcat to allow users to interface with the database and run scripts.

- pyAS2/Gunicorn/nginx – pyAS2 is an opensource AS2 server built with Django. Gunicorn and nginx are used to host the server app in a production environment.

- Selenium – Selenium is an opensource browser automation scripting library built for java and python.

Project Details

The following new processes dramatically increase efficiency and reduce error probability. EDI also adds document accountability since document receipts will be returned.

The final version of this project adds the following processes.

To send an EDI document:

Access Web Server -> Open Pending Document Page (Data will be loaded upon page request) -> select desired data to transmit -> click send

Online Order Importing

Online orders will be read into QuickBooks automatically each work day morning. An action report will be sent out afterwards with order information.

Order Invoicing

Access Web Server -> Open Orders to Invoice (Data will be loaded upon page request) -> Select orders to invoice -> click invoice

3rd Party Warehouse Shipment Confirmations

Shipment is confirmed via EDI -> Shipment confirmation is automatically checked for validity -> Order is automatically invoiced

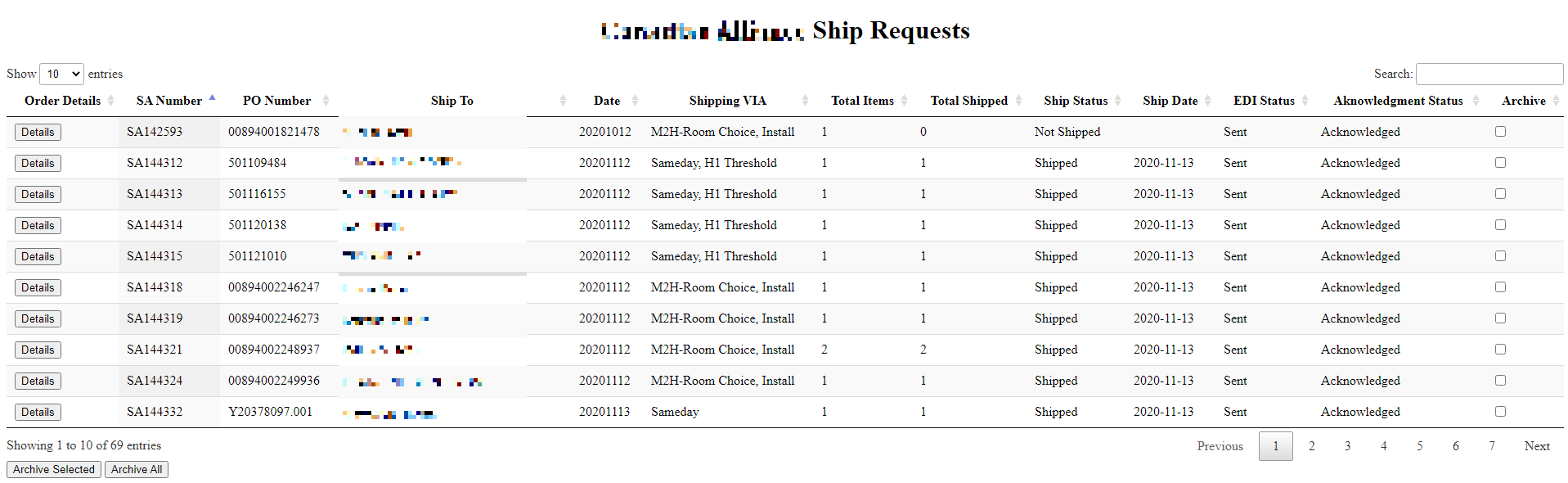

The first partner to integrated with EDI required x12 940 Warehouse Shipping Order requests to be sent and a 945 Warehouse Shipping Advice to be sent back when shipped.

EDI stands for Electronic Data Interchange and works as a standardized data format for business communications. By mapping information to the EDI standards and communicating the mapping to a partner data information can be sent directly between two EDI partner systems. In the x12 EDI transaction set a 997 Functional Acknowledgment will be sent to acknowledge receipt of a document if it is requested by the sender. Each x12 EDI message will be contained in an interchange envelope beginning with an ISA header and ending with an IEA trailer. The different transactions will be contained in a functional group beginning with GS and ending with GE.

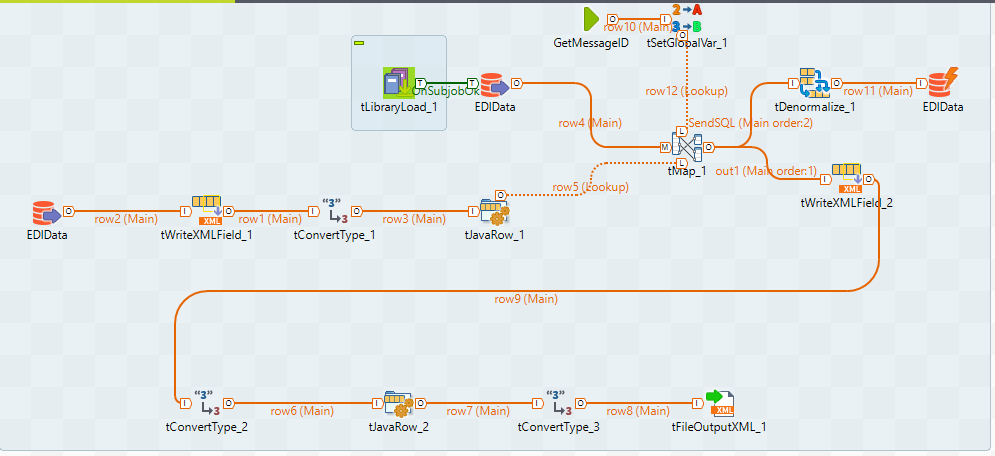

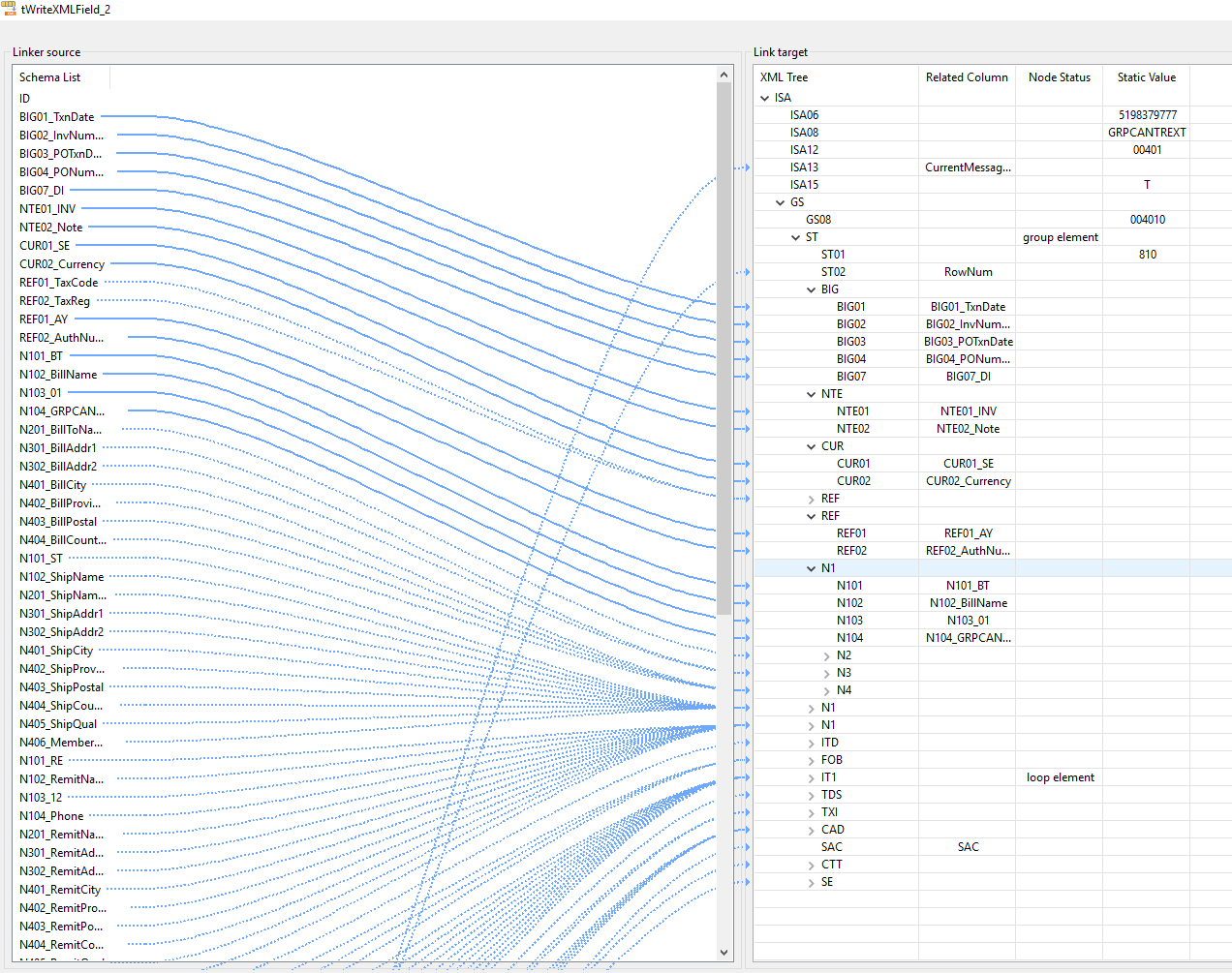

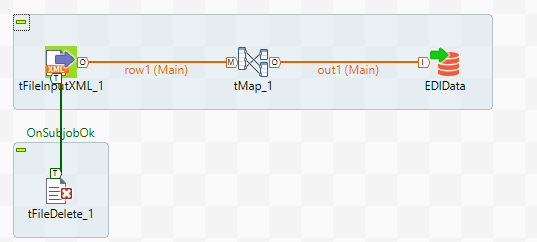

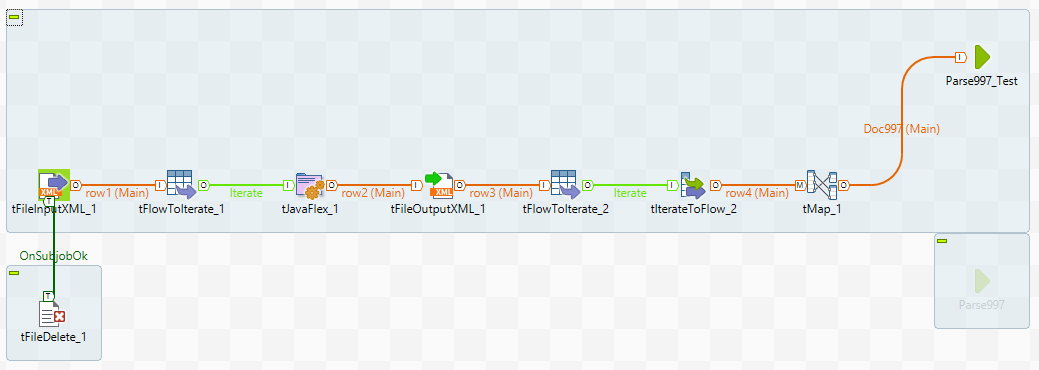

The first step was configuring bots to generate a 940 document and handle 997 response documents automatically. It is possible to read data directly from a database in bots. Rather than perform document logic and mapping in bots straight from the database I decided to use the visual interface and mapping in Talend to transform SQL data into xml. Xml data can be structured and tagged to replicate the structure of an EDI document. Bots can translate this properly tagged and structured xml data straight to EDI. Talend provides a visual interface for mapping which allows changes and error checking to be completed more efficiently.

It is possible to do custom document mapping in bots EDI. This was the first way I tested generating a document. The problem with this method was that the data from QuickBooks had to be transformed into xml before processing and if the document was already being mapped to xml it was redundant to map it twice.

Here is a brief overview of how bots translates:

The data source will be transformed by a mapping script into a node tree. Bots uses a data structure scheme called a grammar that contains the mapping structure and constraints. The bots grammar file for the message type will be used to check the message structure and constraints. Using the grammar file bots will attempt to translate the node tree into the desired message type. A message type must be assigned for the source data and destination data to inform bots what data to expect and how to handle it.

By using the xmlnocheck starting message type bots will assume that the data is structured in the same way as the desired message type and that the xml tags are the names of the nodes. This allows the mapping to take place in Talend by naming the nodes with the grammar name tags and the grammar structure. In the mapping script each xml message will be broken down into individual transaction sets and tagged with the Interchange Control Header information. Bots will take this pool of individual transactions and merge them together when translated using the header information. This means that 10 individual documents can be sent at once and they will be merged into one final document automatically, reducing processing time.

Documents also needed to be tracked to ensure that they are being acknowledged by the trading partner. Bots uses an automatic incrementor for the ISA header that is very robust (Each Interchange Control Number must be unique for each message). I needed an identifier for each message to keep track of it before it is broken apart by bots. I decided to use an ID contained in a SQL table along with a section ID to connect the pre-send data with the post-send data. I created a bots envelope script that works with the mapping to create a temporary spreadsheet with the bots final message ID, pre-send message ID and the section ID. This allows me to track the status of each message. Bots uses a lightweight database to track message data that could be queried to get message information but the spreadsheet method was sufficient.

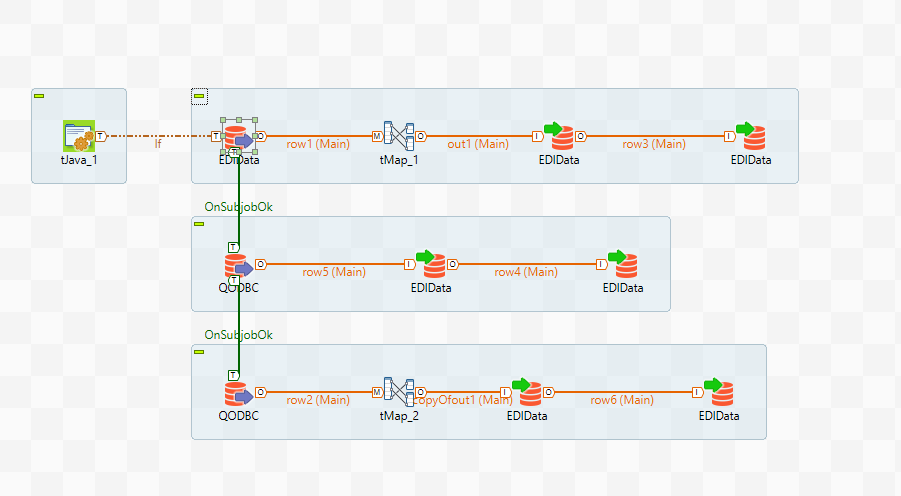

That is how data goes into and out of bots but first the data needs to be generated from QuickBooks. I used Talend to extract information from QODBC (QuickBooks) and perform transformations and checks on the data. QODBC acts as a SQL database for query writing but cannot process anything other than basic queries. To perform complex queries, I extract the basic table information from QODBC and put it in a temporary MSSQL table to perform operations.

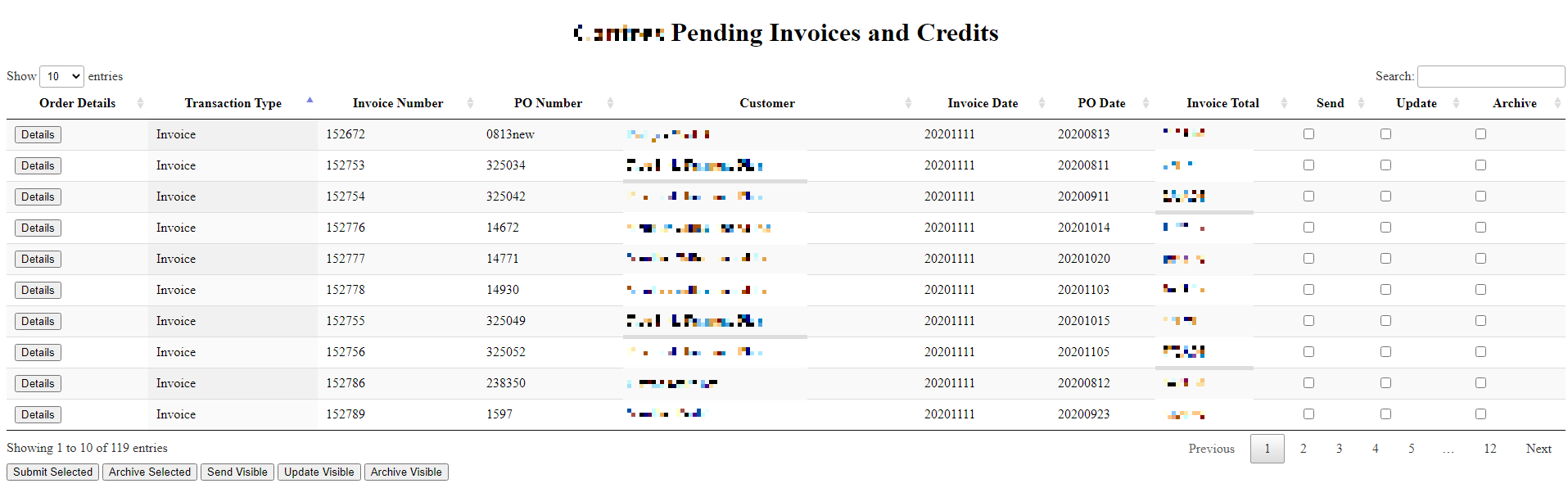

Orders that fit the criteria for a 940 document will be extracted from QODBC and stored in a table. This stored data is viewable in a web interface via Tomcat and can be flagged for sending. After being flagged a Talend script will do the final transformation to xml. At a set interval bots will run and the generated xml file will be processed and sent to the trading partner.

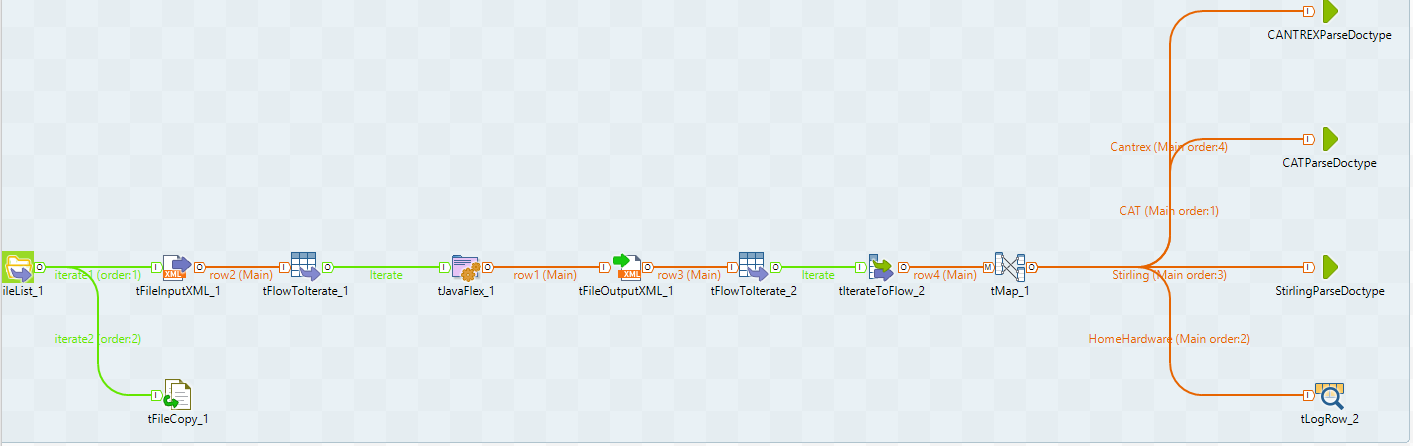

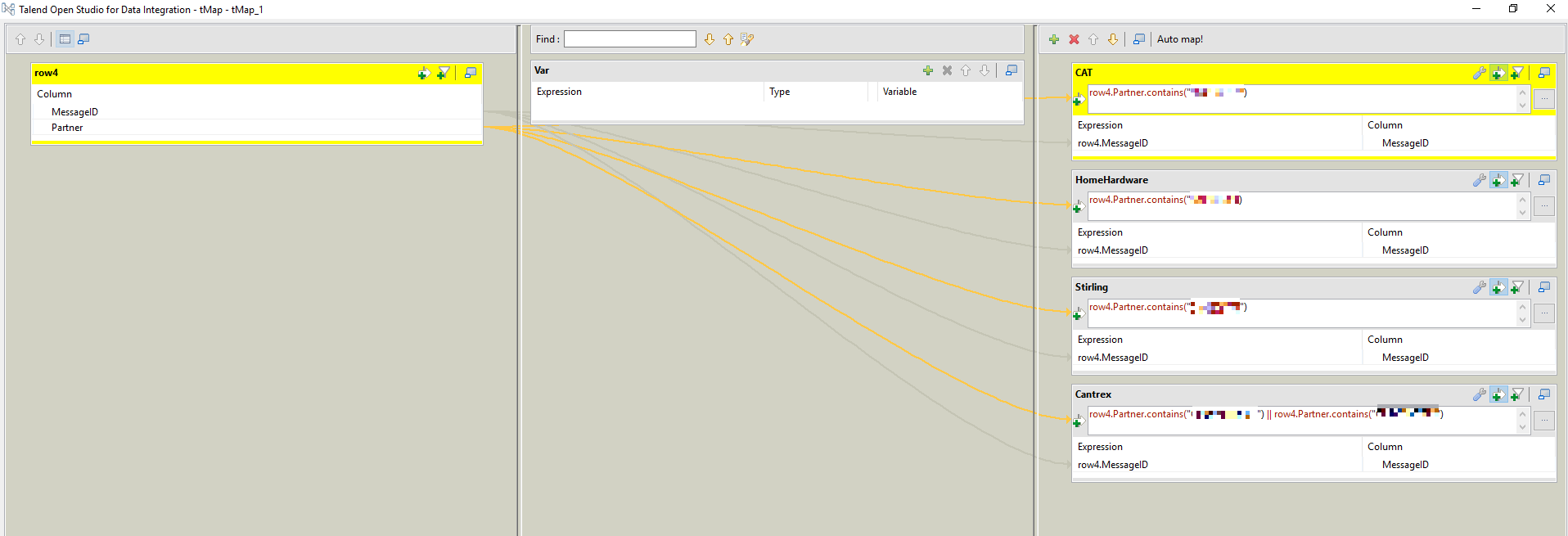

Incoming documents from the trading partners will be translated by bots into xml. Talend will pick up the translated xml and divide the xml into individual Interchange Envelopes if they are combined. These partitioned envelopes will be passed to a partner specific document processor in Talend. The document will be further divided by message type and sent to the partner processor for that specific document.

For example:

A 997 and a 945 document is received from company_x ->

bots will merge the separate documents into one xml document during translation ->

Talend will read this document and send each Interchange Envelope separately to the company_x document parser ->

The company_x parser will check each transaction set in the message and pass that to the document parser. In this case the company_x 997 parser and the company_x 945 parser ->

Any document specific logic will take place in these document parsers.

As documents are generated for bots they will be assigned a temporary Interchange Control Number along with a permanent Transaction Set ID. This data will be stored along with basic message information in a message tracking table. Bots will provide back the final Interchange Control Number which can be used to confirm the message has been sent successfully since it is only provided after an error free translation. When a 997 document is returned by the trading partner it will be parsed in Talend and the message tracking table updated accordingly. Using this system, the status of each message can be tracked from:

Pending – No Interchange Control Number assigned

Sent – Interchange Control Number assigned

Received – 997 parsed with the same Interchange Control Number and Transaction Set ID

For this particular partner the returned 945 documents were integrated into a system for automatically invoicing online orders and tracking them.

Online orders are sent through Commerce Hub, a third-party business network provider. These orders are put in a queue for shipping documents that can be downloaded all at once. This will reset the queued orders. Using this queue system, I created an automation script that reads all the orders in the queue and writes them to QuickBooks via QODBC.

These orders must be tracked in the Commerce Hub web interface once they have been shipped with the invoice number, tracking number and order information. To automate this process, I first created a script that would iterate over a list of orders inputting the order data. Then I created a table for Tracking information and a table for invoice information. By crosschecking this information orders can be confirmed as invoiced and shipped and put in a queue for tracking at the end of the day.

To tie it all together the 945 documents from this partner are checked to make sure that there are no deviations from the expected shipped quantities. If the order has shipped completely it will be automatically invoiced. If a tracking number for that order is already in the system then the order will also be put in the queue to be tracked. This automates the order entry, invoicing and tracking for online retailers.

Another partner required the transmission of EDI documents via AS2.

AS2 is a data exchange protocol. Most documents sent using the AS2 protocol are encrypted and signed by the sender. The receiver will use a paired key to decrypt the message. In AS2 protocol the sender system will initiate the connection with the receiving system. This means that in order to receive messages the receiving system must be continuously listening for message transmissions. Setting up an AS2 server on premise can require significant investment to ensure network security and continuous connection uptime. In most cases it will be more cost effective to use a third-party AS2 provider or host AS2 communication software on a cloud server.

None of the third-party providers I sourced had rates low enough to be a competitive long-term solution. I decided to host pyAS2 on a cloud server. I set up this environment in a Digital Ocean droplet.

In pyAS2 it is possible to modify the AS2 message headers or the document flow as needed. Some partners will require specific information in message headers. I added header fields to reference the message ID and involved partners. To run pyAS2 automatically I created a schedule job that will send pending documents and retry failed documents. PyAS2 refers to the local sender entity as an Organization and the receiving party as a Partner. PyAS2 will create a folder structure automatically with each Organization folder having an inbox for each Partner. Each Partner will also have an outbox for each Organization. By connecting via SFTP documents can be dropped off and picked up from the AS2 server in the respective folders.

Over the course of this project there were specific problems and solutions in each component that I did not put in the project overview.